AdventureTube CI/CD Pipeline Documentation

Introduction to the AdventureTube CI/CD Pipeline

When building the AdventureTube CI/CD pipeline, I need to address three key questions to ensure an efficient and scalable build process.

Ignoring these aspects would result in unnecessary complexity, slowing down development and deployment.

To create a clean and streamlined pipeline, I must clarify these challenges from the outset.

First, I need to determine how to manage multiple build environments and configurations for the AdventureTube CI/CD pipeline.

This involves handling the configurations of six to seven microservice modules effectively.

Without a well-structured approach, managing these configurations across various environments could become cumbersome and prone to errors.

Additionally, all these configurations must work across three different environments: local development, deployment to a Raspberry Pi for testing after containerization, and eventual migration to the Imagine Service.

If this transition process is not designed correctly, it could lead to an overly complex workflow requiring extensive manual effort.

A well-planned approach is necessary to ensure smooth deployments from local development to production.

The second question is how to establish an ideal build process.

If I can effectively manage configurations across different environments, I can create a streamlined, efficient, and reliable build pipeline.

Reducing the time spent on containerization and debugging is crucial.

One of the biggest challenges arises when testing microservices after containerization.

While containerization and microservices offer great advantages, they also introduce complexity, especially when debugging services across different environments.

My goal is to minimize these challenges while maximizing the benefits of this architecture.

Additionally, testing has become a major focus for me.

In recent months, I’ve prioritized automating repetitive tasks, particularly API testing.

The AdventureTube project heavily relies on API communication between mobile devices and the backend system, requiring extensive testing to validate API requests from the mobile side.

Performing these tests manually would be time-consuming and inefficient.

To streamline this process, I plan to use Postman for API calls while automating test execution with AI-driven workflows built in n8n, using its web API capabilities.

This approach will allow me to automate all API tests efficiently, ensuring a more reliable and scalable testing process.

I will update this section once I make progress.

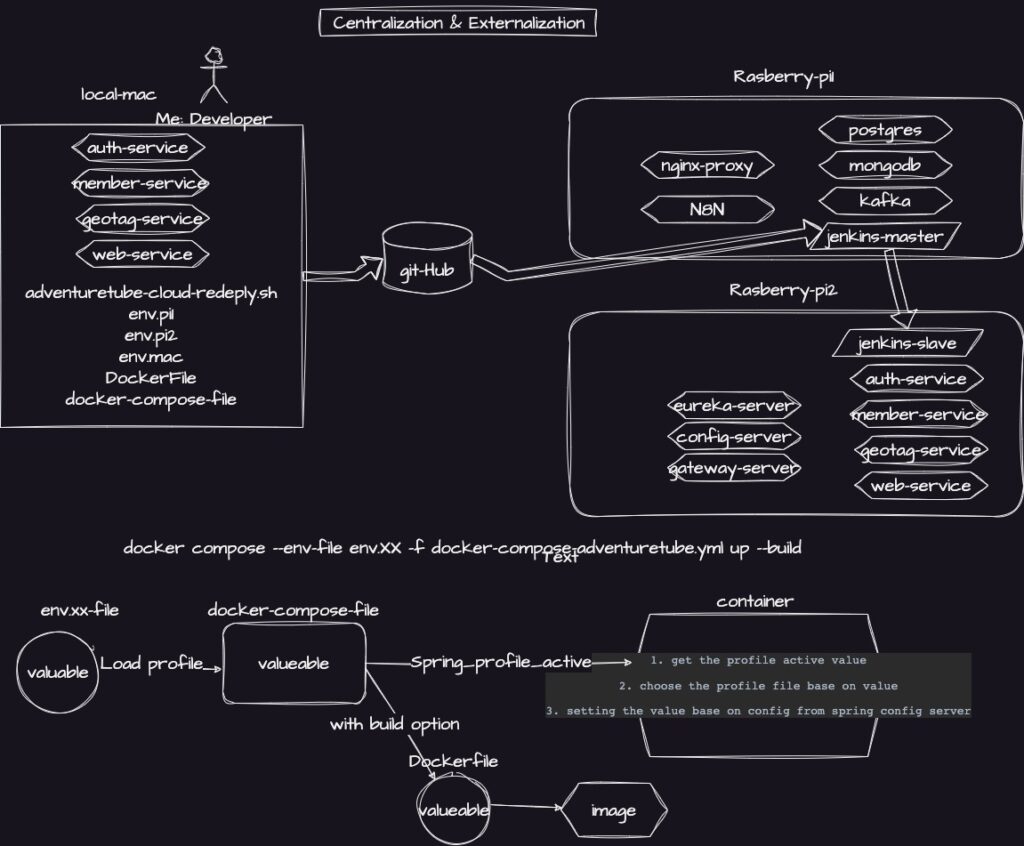

First: How Multiple Build Environments and Configurations are Managed in the AdventureTube CI/CD Pipeline

In the AdventureTube CI/CD pipeline, managing multiple build environments is essential for maintaining a seamless deployment process across different setups.

This system is built on two core concepts: centralized configuration and externalization.

The first concept, centralized configuration, allows me to consolidate all configurations into a single location rather than distributing them across individual sub-modules. Each sub-module keeps only a minimal XML or YAML configuration file whose sole job is to point to the Spring Cloud Config Server, which holds the actual configuration.

By utilizing Spring Cloud Config, I can store and manage configurations for all sub-modules in one central repository.

This approach significantly reduces the risk of human error and ensures that every service pulls its configurations from a unified source.
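To illustrate how every service pulls from one source, the sketch below shows how a sub-module's application name and active profile map onto a Spring Cloud Config Server endpoint of the form /{application}/{profile}[/{label}]. In recent Spring Boot versions, a sub-module's pointer file typically holds little more than spring.application.name and spring.config.import=configserver:<server-url>. The helper class, the service names, and the config-server host are hypothetical (8888 is simply the Config Server's default port), so treat this as an illustration rather than the project's actual code.

```java
import java.net.URI;

/**
 * Hypothetical helper: maps a sub-module's application name and profile onto a
 * Spring Cloud Config Server endpoint of the form /{application}/{profile}[/{label}].
 */
public class ConfigServerUrl {

    // Builds the URI a config client would request; label (e.g. a Git branch) is optional.
    static URI configUri(String serverBase, String application, String profile, String label) {
        // Drop a trailing slash so the path never contains "//".
        String base = serverBase.endsWith("/")
                ? serverBase.substring(0, serverBase.length() - 1)
                : serverBase;
        String path = "/" + application + "/" + profile + (label == null ? "" : "/" + label);
        return URI.create(base + path);
    }

    public static void main(String[] args) {
        // Host, port and service names are illustrative assumptions.
        System.out.println(configUri("http://config-server:8888", "auth-service", "pi", null));
        System.out.println(configUri("http://config-server:8888/", "geo-service", "mac", "main"));
    }
}
```

Because only the profile segment changes between environments, the same sub-module file works unchanged on the Mac, the Pi, and in production.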

The second concept, externalization, is implemented with Java dependencies for reading environment (ENV) variables, which let me manage environment-specific values efficiently. Instead of hardcoding configuration inside each service, I define environment variables in ENV files at a higher level, within the Docker Compose setup.

This structure ensures that configurations are dynamically assigned based on the environment in which the service is running.

When executing Docker commands with the --env-file option, I can explicitly define which environment file to use.

Currently, I maintain four environment files:

- env.mac: local development on macOS
- env.pi: testing on the Raspberry Pi
- env.pi2: a secondary Raspberry Pi testing environment
- env.prod: production deployment

Each file contains the necessary configuration for its respective environment, eliminating the need to hardcode variations directly within Docker Compose.

By selecting the appropriate file, the necessary configurations are automatically applied at runtime.
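At its core, selecting an env file means reading the KEY=VALUE lines of env.mac, env.pi, env.pi2, or env.prod into a map, as in this minimal sketch. The class name and sample values are hypothetical. The parser is also deliberately simpler than Docker Compose's own, which supports more syntax, such as variable interpolation.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Simplified, hypothetical env-file reader: one KEY=VALUE per line, blank lines
 * and # comments ignored, matching outer quotes stripped from values.
 */
public class EnvFileLoader {

    static Map<String, String> parse(List<String> lines) {
        Map<String, String> vars = new LinkedHashMap<>();
        for (String raw : lines) {
            String line = raw.strip();
            if (line.isEmpty() || line.startsWith("#")) {
                continue; // skip blanks and comments
            }
            int eq = line.indexOf('=');
            if (eq <= 0) {
                continue; // skip malformed lines without a key
            }
            String key = line.substring(0, eq).strip();
            String value = line.substring(eq + 1).strip();
            boolean quoted = value.length() >= 2
                    && ((value.startsWith("\"") && value.endsWith("\""))
                        || (value.startsWith("'") && value.endsWith("'")));
            if (quoted) {
                value = value.substring(1, value.length() - 1);
            }
            vars.put(key, value);
        }
        return vars;
    }

    // Maps a target environment (mac, pi, pi2, prod) to its file, e.g. "pi" -> env.pi.
    static Path envFileFor(String target) {
        return Path.of("env." + target);
    }

    // Reads the env file for a target from the current working directory.
    static Map<String, String> load(String target) throws IOException {
        return parse(Files.readAllLines(envFileFor(target)));
    }

    public static void main(String[] args) {
        List<String> sample = List.of(
                "# hypothetical contents of env.pi",
                "SPRING_PROFILES_ACTIVE=pi",
                "CONFIG_SERVER_URL=\"http://config-server:8888\"",
                "");
        System.out.println(parse(sample));
    }
}
```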

One of the most important variables in this setup is SPRING_PROFILES_ACTIVE, which determines which environment profile Spring will load before initialization.

This setup ensures that the correct configuration files are applied depending on whether the service is running in a local development environment, on a Raspberry Pi for QA testing, or in a production-ready cloud environment.

To streamline this process, I implemented a custom EnvironmentPostProcessor class, which reads the active profile variable set in Docker Compose and loads the corresponding environment file before Spring initializes.

This ensures that the appropriate configurations are set even before the Spring application starts.
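A real post-processor would implement Spring Boot's EnvironmentPostProcessor interface, whose method is postProcessEnvironment(ConfigurableEnvironment, SpringApplication), and would be registered in META-INF/spring.factories. The framework-free sketch below isolates only the decision logic. It rests on two assumptions the text above does not state: a missing SPRING_PROFILES_ACTIVE falls back to the mac profile, and variables already set by Docker Compose override values read from the file. The class and variable names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/**
 * Hypothetical, Spring-free version of the profile-to-env-file decision a custom
 * EnvironmentPostProcessor could make before the application context starts.
 */
public class ProfileEnvResolver {

    // Assumed fallback when SPRING_PROFILES_ACTIVE is unset (e.g. running from the IDE on the Mac).
    static final String DEFAULT_PROFILE = "mac";

    // SPRING_PROFILES_ACTIVE may be a comma-separated list; the first non-blank entry picks the env file.
    static String primaryProfile(Map<String, String> systemEnv) {
        String active = systemEnv.getOrDefault("SPRING_PROFILES_ACTIVE", "");
        for (String candidate : active.split(",")) {
            if (!candidate.isBlank()) {
                return candidate.strip();
            }
        }
        return DEFAULT_PROFILE;
    }

    // File values are loaded first; real environment variables are layered on top and win.
    static Map<String, String> resolve(Map<String, String> systemEnv,
                                       Function<String, Map<String, String>> envFileReader) {
        String profile = primaryProfile(systemEnv);
        Map<String, String> merged = new HashMap<>(envFileReader.apply("env." + profile));
        merged.putAll(systemEnv);
        return merged;
    }

    public static void main(String[] args) {
        // In-memory stand-in for the env files on disk; keys and values are illustrative.
        Map<String, Map<String, String>> files = Map.of(
                "env.pi", Map.of("DB_HOST", "pi-db"),
                "env.mac", Map.of("DB_HOST", "localhost"));
        Function<String, Map<String, String>> reader = name -> files.getOrDefault(name, Map.of());

        System.out.println(resolve(Map.of("SPRING_PROFILES_ACTIVE", "pi"), reader).get("DB_HOST"));
        System.out.println(resolve(Map.of(), reader).get("DB_HOST"));
    }
}
```

Keeping this logic free of Spring types makes it easy to unit-test. The post-processor itself would then only copy the returned map into a MapPropertySource and add it to the environment's property sources.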

The ENV file, Docker Compose, and Dockerfile all rely on this mechanism to determine the correct configuration, from building the image to starting the container.

This entire setup is designed to create a single, centralized configuration system, where an environment file dictates every aspect of the build process, from the Docker container setup to the final Spring initialization.

Second: What is the Ideal Build Process in the AdventureTube CI/CD Pipeline?

The build process in the AdventureTube CI/CD pipeline consists of three key components: the development environment, deployment to the QA server, and production deployment.

The development environment refers to the local build process, where I run the microservice sub-modules directly on my Mac without containerizing them.

This approach simplifies development and debugging while also preserving computing power for more critical tasks.

Running and maintaining containers locally is unnecessary for development, so I focus on direct source code execution instead.

The second component is deployment to the QA server, where the application is tested in a controlled environment. This stage uses a Jenkins master/slave setup together with a shell script that manages all Docker Compose operations.

Once I have updated the source code and tested it locally, I push the changes to GitHub, which sends a push notification to my Jenkins master.

The master then instructs the Jenkins slave to pull the new source code from GitHub, build a new image, and run the updated container while cleaning up the old container.

This ensures that the QA environment always runs the latest build.
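The steps the Jenkins slave performs can be sketched as a dry-run command plan. The real shell script is not shown here, so the git and docker compose invocations below, including the --ff-only and --remove-orphans flags, are an assumed equivalent rather than a copy. The sketch also assumes every command runs from the repository root.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical dry-run of the QA deploy steps on the Jenkins slave:
 * pull, rebuild, recreate containers, clean up. It only builds command lines.
 */
public class QaDeployPlan {

    static List<List<String>> plan(String envFile) {
        List<List<String>> steps = new ArrayList<>();
        // 1. Fetch the commit that triggered the build.
        steps.add(List.of("git", "pull", "--ff-only"));
        // 2. Rebuild the images; the env file supplies variables for the compose file.
        steps.add(List.of("docker", "compose", "--env-file", envFile, "build"));
        // 3. Start in the background; changed services are recreated, replacing the old containers.
        steps.add(List.of("docker", "compose", "--env-file", envFile, "up", "-d", "--remove-orphans"));
        // 4. Remove dangling images left behind by the rebuild.
        steps.add(List.of("docker", "image", "prune", "-f"));
        return steps;
    }

    public static void main(String[] args) {
        for (List<String> step : plan("env.pi")) {
            // Print only; a real runner could execute each step with new ProcessBuilder(step).inheritIO().start().
            System.out.println(String.join(" ", step));
        }
    }
}
```

docker compose up -d recreates only the containers whose image or configuration changed, so the old containers are replaced in that same step. The final prune then removes the dangling images the rebuild leaves behind.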

The third component is production deployment, which has not yet been fully implemented.

For now, the Raspberry Pi system will act as the production environment. After the source code is committed and pushed to GitHub, the code running in the QA containers is identical to the code on my local machine.

This consistency allows me to debug remotely using specific ports assigned to different modules.

This setup lets me debug all microservice sub-modules on my local Mac and also debug them remotely on the Raspberry Pi while they run inside Docker containers.

It is essential to be able to debug locally when running the Java packages, but it is equally important to access and debug the Raspberry Pi environment while those packages run inside containers.

This flexibility is a crucial aspect of my build system, as it accounts for the differences between the local development environment and the QA environment.
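One way to give each module its own debug port is to start every JVM with a JDWP agent on a distinct port and publish that port from its container. The sketch below assigns consecutive ports and builds the matching JVM option with the standard -agentlib:jdwp syntax. The module names and the 5005 base port are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical helper: one JDWP debug port per sub-module so an IDE on the Mac
 * can attach to any container running on the Raspberry Pi.
 */
public class DebugPorts {

    // Assigns consecutive ports starting at basePort, in module order.
    static Map<String, Integer> assignPorts(List<String> modules, int basePort) {
        Map<String, Integer> ports = new LinkedHashMap<>();
        for (int i = 0; i < modules.size(); i++) {
            ports.put(modules.get(i), basePort + i);
        }
        return ports;
    }

    // JVM option enabling a JDWP socket listener; "*:" binds all interfaces on JDK 9+.
    static String jdwpOption(int port) {
        return "-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=*:" + port;
    }

    public static void main(String[] args) {
        List<String> modules = List.of("gateway-service", "auth-service", "geo-service");
        assignPorts(modules, 5005).forEach((module, port) -> {
            // e.g. passed to the container as JAVA_TOOL_OPTIONS, with the same port published in Docker Compose
            System.out.println(module + " -> JAVA_TOOL_OPTIONS=" + jdwpOption(port));
        });
    }
}
```

The IDE on the Mac then attaches a remote debug configuration to the Pi's address and the module's port. JDWP has no authentication, so these ports should only be exposed on a trusted network.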